This is the last post in the series about updateable views. The previous posts (Updateable views – how to use them and INSTEAD OF triggers on views) described the mechanics of the views and triggers that you can use. I haven’t answered yet to question where views and triggers can be useful?

The common issue that developers meet when developing SSIS packs is that there is not ‘built-in’ destination transformation that would insert rows when they don’t exist in the destination table and update rows that exist. Usually developers use a lookup and split the dataflow to OLEDB destination and OLEDB command. Adam Haines (blog) wrote a very nice post about using INSTEAD OF triggers on views to improve this solution.

The other scenario I see quite interesting is logging rows that fail to be inserted into destination table. Usually failure occurs because of constraint violations – like invalid value of the column on which there is a CHECK constraint or NULL where it shouldn’t be.

BCP allows for output of invalid rows into a separate file. The same sometimes is used in SSIS solutions. You can create File destination to save rows which fail to be input into the table. I see a few issues with this approach. If you use CSV file, you loose distinction between NULL and empty fields. If you use raw binary file – you can’t really investigate its contents. There is also all this hassle with destinations, file locations, disk space, access etc.

I’ve been working with SSIS for a few years and in the past we came up with idea to create custom component, written in C# that would be used in script components of SSIS at the end of data flows, connected with final destinations with red arrows. This worked pretty well. In ProcessInputRow handler the row would be passed to the component which would create XML from it using reflection. The XML would subsequently be saved in a table in database, making it easy to query and troubleshoot.

Well, this approach has a few drawbacks as well: you need to deploy and maintain component’s code, the error handling is processed row by row, and reflection does not improve performance, especially when upstream data were totally wrong and all rows had to be redirected to the audit output.

I recently began new project, and had to approach the question of audit failed rows once more. I didn’t want to use the component due to these problems I wrote above. I realized that I can use SQL Server to convert the data from the pack into XML. Let’s look how it works.

First, let’s create testing environment. We will create package that moves data from one database to another. The following snippet creates source and destination databases and table that contains the source data.

1: use master

2: go

3: create database SSISTestSourceDB

4: go

5: create database SSISTestDestinationDB

6: go

7: 8: use SSISTestSourceDB

9: go

10: create table tSource(Col1 int, Col2 int null, Col3 varchar(20), Col4 datetime)

11: go

12: insert tSource(Col1, Col2, Col3, Col4)13: values

14: (1, 1, 'Value1', CURRENT_TIMESTAMP),

15: (2, null, 'Value1', CURRENT_TIMESTAMP), --for null value error

16: (3, 3, 'DB', CURRENT_TIMESTAMP), --for min length check constraint violation

17: (4, 4, 'Value1', CURRENT_TIMESTAMP),

18: (5, 5, 'Value1', CURRENT_TIMESTAMP),

19: (6, 100, 'Value1', CURRENT_TIMESTAMP), --for out of range check constraint violation

20: (7, 7, 'Value1', CURRENT_TIMESTAMP),

21: (8, 8, 'Value1', CURRENT_TIMESTAMP),

22: (9, 9, 'Value1', CURRENT_TIMESTAMP),

23: (10, 10, 'Value1', CURRENT_TIMESTAMP), --two tens for pk violation on destination

24: (10, 11, 'Value1', CURRENT_TIMESTAMP),

25: (11, 12, 'Value1', CURRENT_TIMESTAMP)

26: 27: go

Now let’s create table in the destination database:

1: use SSISTestDestinationDB

2: go

3: create schema Staging authorization dbo

4: go

5: create table Staging.tDestination

6: (7: Col1 int not null constraint PK__1 primary key clustered,

8: Col2 int not null constraint CHK__Col2_Range check (Col2 < 30),

9: Col3 varchar(20) not null constraint CHK__MinLenGreaterThan2 check (len(Col3) > 2),

10: Col4 datetime not null

11: ) 12: goThe Audit table will store the audit data:

1: --table that will accept errors from the pack

2: create table Audit.tAuditETLErrors

3: (4: [ErrorCode] [bigint] NULL,

5: [ErrorColumn] [bigint] NULL,

6: [ErrorDescription] [nvarchar](1000) NULL,

7: [ErrorData] [xml] NULL, --actual row data

8: [UpdateDate] [datetime] not null constraint DF_UpdateDate1 default (getdate())

9: ) 10: goAs you see, the table is pretty simple: ErrorCode and ErrorColumn are derived directly from data flow in the SSIS package, ErrorDescription is added to retrieve more meaningful error description and ErrorData is XML column that contains serialized audit row.

Now the main part of the idea: a view with an INSTEAD OF INSERT trigger on it.

1: --create error handling view and trigger

2: create view Audit.vDestinationSSISError

3: as

4: select convert(bigint, 0) ErrorCode,

5: convert(bigint, 0) ErrorColumn,

6: convert(nvarchar(1000), N'') ErrorDescription,

7: dst.* 8: from Staging.tDestination dst

9: where 1 = 0 --this view doesn't have to return any data

10: go 11: create trigger Audit.trgins_vDestinationSSISError on Audit.vDestinationSSISError 12: instead of insert 13: as 14: begin 15: set nocount on 16: --insert new row to audit table. actual data are converted to XML 17: insert Audit.tAuditETLErrors (ErrorCode, ErrorColumn, ErrorDescription, ErrorData, UpdateDate) 18: select ErrorCode, ErrorColumn, ErrorDescription, 19: (select * from inserted 20: for xml raw, elements xsinil, root('etl_error')), current_timestamp

21: from inserted

22: end

23: goThe view doesn’t return any data, because in this case it is used only for trigger. The view has to expose ErrorCode, ErrorColumn and ErrorDescription columns, plus all columns of the destination table if you want to audit all columns.

The trigger uses select… for xml query to serialize incoming data into xml stream that is inserted eventually to ErrorData column.

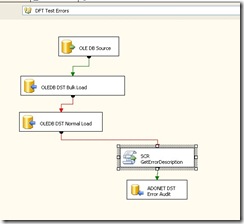

The package is built in pretty simple way. There is OLEDB source, OLEDB destination – using ‘fast’ bulk load option. Then there is OLEDB ‘Normal’ destination – for batches which were rolled back because of errors in the ‘Fast’ destination.

Then, for rows that didn’t make it to the destination table, redirection to additional destination is made, to the view destination:

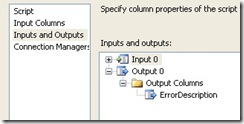

The script component before the final destination adds ErrorDescription to the data flow:

There’s a catch: you better use ADO.NET destination in the package because OLEDB destination is unaware of the triggers on the views. While you can configure OLEDB destination to use view, the rows will fail to be inserted because of the same reasons they weren’t inserted in the fist place. You can solve it by creating a table with exactly the same schema as the destination table but without any constraints. This approach is a bit awkward and you have to maintain tables in sync. I found it easier to use ADO.NET destination. If you use connection string stored in package configurations, you just have to be sure that you use connection string for .NET for this destination, otherwise it will not work.

You map the columns the normal way:

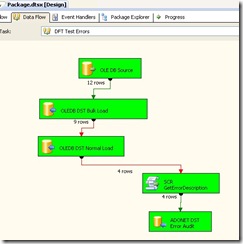

This is what you’ll see when you run the package:

From 12 rows read from the source, three were inserted using bulk insert, and nine went to the ‘Slow’ destination. There, rows were inserted row by row, and rows which failed were redirected to the error output – you see there were four of them. Then the script component adds ErrorDescription column and rows are inserted into the view. When you select from the Audit.tAuditETLErrors table you will see all four of them:

I find this setup pretty useful, I wonder what do you think.

I attach the test script and the test SSIS project so you can straight go to the fun part.

No comments:

Post a Comment